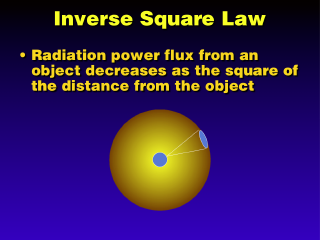

The intensity of radiation from a point source decreases as you get farther away from it. It falls off as the inverse square of the distance, because the same emitted power is spread over an ever larger spherical shell. The Sun has a radiative surface temperature of about 6000 Kelvin, which gives a high intensity of emission at its surface. By the time the expanding shell of radiation reaches Earth, 150 million kilometers away, the intensity is greatly reduced: the energy flux at this distance, called the "solar constant", is about 1380 Watts per square meter.
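The inverse-square argument can be checked numerically. The sketch below treats the Sun as a spherical blackbody. Its surface flux is σT⁴, and that flux is diluted by the factor (R/d)² by the time it reaches distance d. The solar radius (6.957 × 10⁸ m) and the mean Earth-Sun distance (1.496 × 10¹¹ m) are assumed values that the text does not give. The function names are illustrative only.

```python
# Stefan-Boltzmann constant in W m^-2 K^-4
SIGMA = 5.670374419e-8

# Assumed values (not given in the text): the Sun's photospheric radius
# and the mean Earth-Sun distance, both in meters.
R_SUN = 6.957e8
D_EARTH = 1.496e11


def surface_flux(temperature_k: float) -> float:
    """Power emitted per square meter by a blackbody at the given temperature."""
    return SIGMA * temperature_k ** 4


def flux_at_distance(temperature_k: float, distance_m: float,
                     radius_m: float = R_SUN) -> float:
    """Flux at distance_m from a spherical blackbody of radius radius_m.

    The total power 4*pi*R^2 * sigma*T^4 is spread over a sphere of area
    4*pi*d^2, so the flux scales as (R/d)^2, the inverse square of d.
    """
    return surface_flux(temperature_k) * (radius_m / distance_m) ** 2


if __name__ == "__main__":
    for t in (6000.0, 5800.0):
        print(f"T = {t:.0f} K: surface {surface_flux(t):.3e} W/m^2, "
              f"at Earth {flux_at_distance(t, D_EARTH):.0f} W/m^2")
```

With the round figure of 6000 K, this estimate comes out near 1600 W/m². A temperature closer to 5800 K reproduces the quoted solar constant of about 1380 W/m² much more closely. In both cases, the flux at Earth is tens of thousands of times smaller than the flux at the Sun's surface.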

First compute the amount of energy the Earth actually absorbs from the Sun. This is the solar constant times the Earth's cross-sectional area, less the roughly 30% of the incoming sunlight that is reflected back to space. The Earth must radiate this same amount of energy away from its whole surface, or else it would eventually burn up or freeze out. Combining these two facts, we can compute the Earth's temperature using the Stefan-Boltzmann Law, which states that a blackbody emits power per unit area in proportion to the fourth power of its absolute temperature. This calculation gives an average Earth temperature of about 255 K.
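The energy balance above can be written as a short calculation. The Earth absorbs S(1 − a)πR² and emits σT⁴ · 4πR², so the radius cancels and T = [S(1 − a)/(4σ)]^(1/4). This is a minimal sketch that assumes a blackbody Earth with a single uniform temperature. The albedo a = 0.3 is an assumed typical value, not a number from the text, and the function name `equilibrium_temperature` is hypothetical.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def equilibrium_temperature(solar_constant: float, albedo: float) -> float:
    """Temperature at which a sphere radiates away exactly what it absorbs.

    Absorbed:  S * (1 - albedo) * pi * R^2   (sunlight intercepted by the disk)
    Emitted:   sigma * T^4 * 4 * pi * R^2    (blackbody emission from the sphere)
    Setting them equal, R cancels: T = (S * (1 - albedo) / (4 * sigma)) ** 0.25
    """
    absorbed_per_area = solar_constant * (1.0 - albedo) / 4.0
    return (absorbed_per_area / SIGMA) ** 0.25


if __name__ == "__main__":
    S = 1380.0  # solar constant quoted in the text, W/m^2
    # Albedo of about 0.3 is an assumed value, not given in the text.
    print(f"albedo 0.3: {equilibrium_temperature(S, 0.3):.0f} K")  # about 255 K
    print(f"albedo 0.0: {equilibrium_temperature(S, 0.0):.0f} K")  # about 279 K
```

The second line shows why reflection matters. If no sunlight were reflected, the same balance would give roughly 279 K instead of 255 K.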

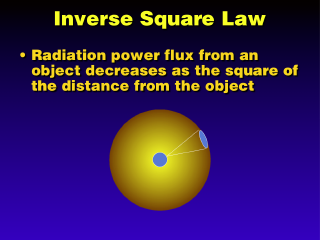
